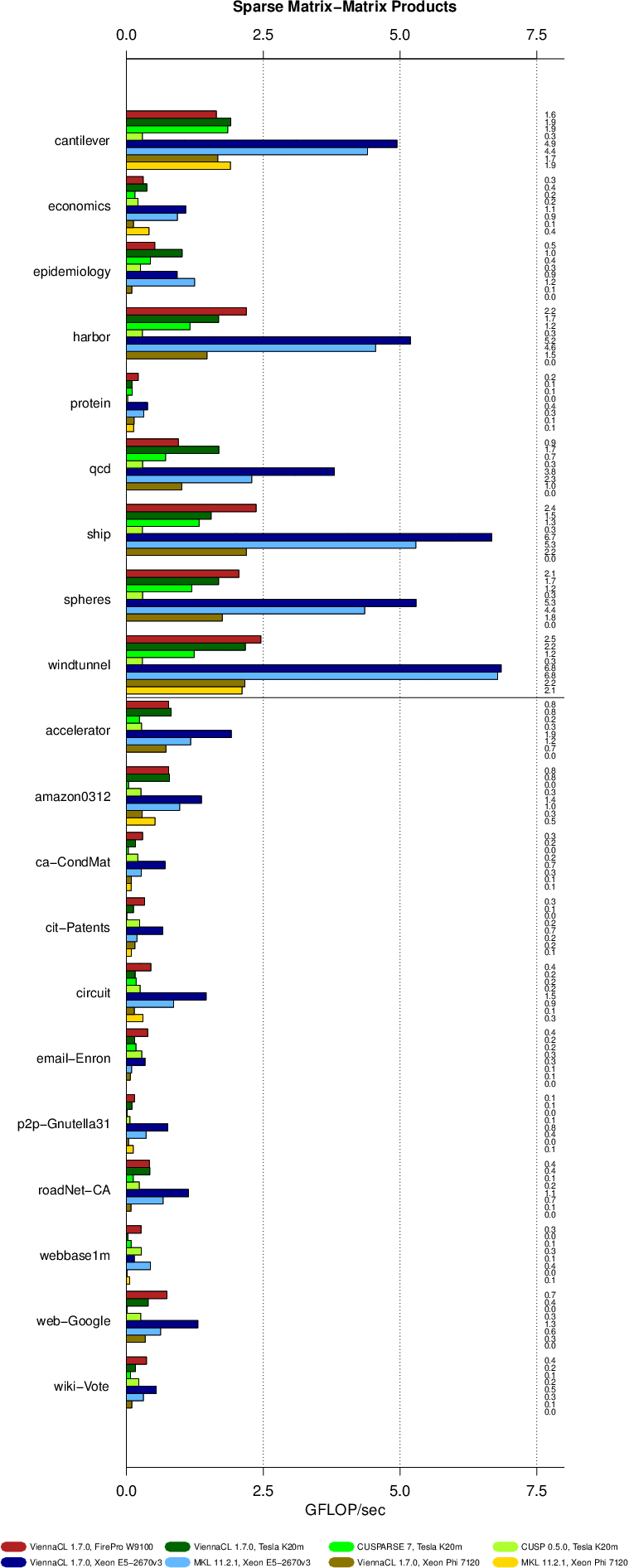

Sparse Matrix-Matrix Products

In addition to sparse matrix-vector products, sparse matrix-matrix products are important for applications such as algebraic multigrid methods and for many graph operations, including subgraph extraction, breadth-first search, transitive closure, and graph clustering. ViennaCL provides fast sparse matrix-matrix products for matrices in compressed sparse row (CSR) format. A performance comparison on hardware from AMD, INTEL, and NVIDIA is given below. All benchmarks were carried out in double-precision arithmetic on Linux workstations.
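For readers unfamiliar with the operation, the following is a minimal sketch of the classic row-wise (Gustavson) algorithm for computing C = A·B when both inputs are stored in CSR format. It is a generic textbook formulation written for illustration only, not ViennaCL's implementation, which targets GPUs and many-core CPUs and is considerably more involved. The `CsrMatrix` struct and the `spgemm` function are hypothetical names introduced here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal CSR container, for illustration only.
struct CsrMatrix {
  std::size_t rows = 0, cols = 0;
  std::vector<std::size_t> row_ptr;  // size rows + 1; row i spans [row_ptr[i], row_ptr[i+1])
  std::vector<std::size_t> col_idx;  // column index of each stored entry
  std::vector<double> values;        // value of each stored entry
};

// Row-wise (Gustavson) sparse product C = A * B.
// Row i of C is the sum of rows k of B, each scaled by A(i,k).
CsrMatrix spgemm(const CsrMatrix& A, const CsrMatrix& B) {
  assert(A.cols == B.rows);  // inner dimensions must agree

  CsrMatrix C;
  C.rows = A.rows;
  C.cols = B.cols;
  C.row_ptr.assign(A.rows + 1, 0);

  std::vector<double> acc(B.cols, 0.0);      // dense accumulator for one row of C
  std::vector<bool> occupied(B.cols, false); // marks columns already present in this row
  std::vector<std::size_t> touched;          // columns touched in this row

  for (std::size_t i = 0; i < A.rows; ++i) {
    touched.clear();
    for (std::size_t ka = A.row_ptr[i]; ka < A.row_ptr[i + 1]; ++ka) {
      const std::size_t k = A.col_idx[ka];
      const double a_ik = A.values[ka];
      // Scatter a_ik * (row k of B) into the accumulator.
      for (std::size_t kb = B.row_ptr[k]; kb < B.row_ptr[k + 1]; ++kb) {
        const std::size_t j = B.col_idx[kb];
        if (!occupied[j]) {
          occupied[j] = true;
          touched.push_back(j);
        }
        acc[j] += a_ik * B.values[kb];
      }
    }
    // Gather the row in ascending column order and reset the workspace.
    std::sort(touched.begin(), touched.end());
    for (std::size_t j : touched) {
      C.col_idx.push_back(j);
      C.values.push_back(acc[j]);
      acc[j] = 0.0;
      occupied[j] = false;
    }
    C.row_ptr[i + 1] = C.col_idx.size();
  }
  return C;
}
```

Each row of C depends only on the matching row of A and on the rows of B selected by its nonzeros, which is one reason row-wise formulations parallelize naturally across rows. The dense accumulator trades O(number of columns) workspace per worker for constant-time insertion; hash-based or sort-based accumulators are common alternatives when that workspace is too large.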

Overall, ViennaCL's implementation is about twice as fast as the implementations in cuSPARSE and CUSP on average (geometric mean). On the dual-socket Xeon Haswell system, ViennaCL achieves an average performance gain of 50 percent over INTEL MKL. ViennaCL's implementation is also competitive on the Xeon Phi, but we could not run a full comparison against MKL there, because the MKL routines terminated with segmentation faults on the Xeon Phi.
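Speedups are ratios, which is why a geometric rather than arithmetic average is the natural summary. The sketch below shows how such an average can be computed from per-matrix speedups s_i = t_reference / t_new; the function name `geometric_mean_speedup` is hypothetical, and no benchmark data from this page is reproduced here.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Geometric mean of per-matrix speedups s_i = t_reference / t_new.
// Computed as exp(mean(log s_i)) to avoid overflow or underflow from a long product.
double geometric_mean_speedup(const std::vector<double>& speedups) {
  assert(!speedups.empty());
  double log_sum = 0.0;
  for (double s : speedups) {
    assert(s > 0.0);  // speedups are ratios of positive run times
    log_sum += std::log(s);
  }
  return std::exp(log_sum / static_cast<double>(speedups.size()));
}
```

For two hypothetical speedups of 2 and 8, the geometric mean is 4, and for the reciprocal ratios 0.5 and 0.125 it is 1/4, so the summary is consistent regardless of which implementation is used as the reference. The arithmetic mean lacks this symmetry: it gives 5 for the first pair but 0.3125 (not 1/5) for the second.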